In this post I would like to explain the principles of IP-Hash Load Balancing when connecting VMware hosts to IP storage such as NFS. The goal is to show how traffic is balanced across multiple NICs.

As reported in my previous article, VMware: Uplink used by a VM in a LB NIC Teaming, Load Balancing policies take effect between the vmnics and the physical storage, including all pSwitches in between.

Load Balancing does not take place between VMs, VMkernel ports and Port Groups within vSwitches.

IP-Hash Load Balancing is the LB technique that can potentially achieve the highest degree of efficiency. It selects vmnics with an algorithm that considers the source and destination IP addresses of each packet. Unlike Route Based on originating virtual port ID, it doesn't use a Round Robin vmnic assignment methodology.

Although IP-Hash Load Balancing leads to better traffic distribution, it also requires the most complex set-up. Specific configuration changes are needed on every pSwitch that packets traverse to reach the IP storage.

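On the pSwitches between your VMware hosts and the IP storage, you will need to enable link aggregation to benefit from IP-Hash Load Balancing. This means IEEE 802.3ad LACP, or EtherChannel if you deal with Cisco equipment. Link aggregation bundles from 2 up to 8 Ethernet links into a single logical link with more aggregate bandwidth and resiliency. Keep in mind that a standard vSwitch only supports static link aggregation (e.g. EtherChannel in mode "on"). LACP negotiation requires a vSphere Distributed Switch 5.1 or later.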

Here's a simple connection schema. Please bear in mind that in a real-world scenario, using a single pSwitch introduces a single point of failure into your infrastructure.

You should also consider implementing VLANs to isolate storage traffic. As you certainly know, this is a rule of thumb for storage traffic such as NFS or iSCSI. It applies equally to management, vMotion and FT traffic, etc.

Since this post is VMware related, I will assume you have already configured LACP/EtherChannel properly on your physical network. For more info on the pSwitch setup, please have a look at VMware KB 1004048.

Let's start by setting up our virtual networking. For the sake of simplicity, we will use just two vmnics.

We create a new vSwitch and a VMkernel port for IP storage (NFS in my case), and assign these two vmnics to it.

Please make sure that all vmnics are set as Active and that no vmnic is set as Standby or Unused. IP-Hash Load Balancing must be set at vSwitch level and not overridden at VMkernel/Port Group level.
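For reference, the same setup can also be scripted from the ESXi shell with esxcli. This is a sketch assuming ESXi 5.x esxcli syntax. The vSwitch name vSwitch1, the port group name NFS, the interface vmk1 and the /24 netmask are hypothetical placeholders for your own environment. Please double-check the option names with `--help` on your build before running it.

```shell
# Hypothetical names: vSwitch1, port group "NFS", VMkernel interface vmk1.
# Create the vSwitch and attach both storage uplinks.
esxcli network vswitch standard add --vswitch-name=vSwitch1
esxcli network vswitch standard uplink add --uplink-name=vmnic0 --vswitch-name=vSwitch1
esxcli network vswitch standard uplink add --uplink-name=vmnic1 --vswitch-name=vSwitch1

# IP-Hash at vSwitch level, with both vmnics Active (no Standby/Unused).
esxcli network vswitch standard policy failover set --vswitch-name=vSwitch1 \
    --load-balancing=iphash --active-uplinks=vmnic0,vmnic1

# Port group and VMkernel port for NFS (no failover override on the port group).
esxcli network vswitch standard portgroup add --portgroup-name=NFS --vswitch-name=vSwitch1
esxcli network ip interface add --interface-name=vmk1 --portgroup-name=NFS
esxcli network ip interface ipv4 set --interface-name=vmk1 \
    --ipv4=192.168.116.10 --netmask=255.255.255.0 --type=static

# Verify the effective teaming policy of the vSwitch.
esxcli network vswitch standard policy failover get --vswitch-name=vSwitch1
```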

The IP-Hash Load Balancing algorithm chooses which vmnic to use for each IP connection. The choice is based on the source (VMkernel) and destination (storage) IP addresses, according to the following equation:

vmnic used = [HEX(IP VMkernel) xor HEX(IP Storage)] mod (Number of vmnics)

where:

HEX indicates that the IP address has been converted to hexadecimal format. This is done octet by octet. For example, the IP address 10.11.12.13 in HEX is 0A.0B.0C.0D, which is represented as 0x0A0B0C0D.

IP VMkernel is the IP address assigned to the VMkernel used for IP Storage

IP Storage is the IP address assigned to the NFS storage

xor is the bitwise exclusive OR operator

mod is the modulo operation
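To make the formula concrete, here is a minimal POSIX sh sketch of it. It should also run in the ESXi busybox shell, though I haven't tested every version. The sketch assumes that the formula above is exactly what the host computes. The function names `ip_to_int`, `ip_to_hex` and `iphash_uplink` are hypothetical helpers, not VMware tools. It also assumes plain dotted-quad addresses without leading zeros (a leading zero would be parsed as octal) and a shell with 64-bit arithmetic.

```shell
#!/bin/sh
# Hypothetical helpers implementing the formula above:
#   vmnic used = [HEX(IP VMkernel) xor HEX(IP Storage)] mod (Number of vmnics)

# Convert a dotted-quad IPv4 address to one 32-bit integer,
# octet by octet (10.11.12.13 -> 0x0A0B0C0D).
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the address in the 0x-prefixed hex form used in this post.
ip_to_hex() {
  printf '0x%08X\n' "$(ip_to_int "$1")"
}

# Print the index of the vmnic chosen for a VMkernel/storage IP pair.
# $1 = VMkernel IP, $2 = storage IP, $3 = number of vmnics
iphash_uplink() {
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  echo $(( (a ^ b) % $3 ))
}

ip_to_hex 10.11.12.13
iphash_uplink 192.168.116.10 192.168.116.50 2
```

The two calls at the bottom print 0x0A0B0C0D and 0 (i.e. vmnic0), respectively.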

Now that we know how vmnics are chosen for data transfer, let's examine how we can wisely assign IP addresses to our IP storage systems.

In this example I will use an NFS server with two NICs (no vIP). I've already assigned IP 192.168.116.10 to the VMkernel port responsible for carrying IP storage data.

1) Bad choice of IP addresses

VMkernel = 192.168.116.10

NFS 1 = 192.168.116.50

NFS 2 = 192.168.116.60

Let's do some math:

vmnic used for NFS1 = [HEX(192.168.116.10) xor HEX(192.168.116.50)] mod (2)

= [0xC0A8740A xor 0xC0A87432] mod (2)

= [0x38] mod (2)

= 0 -> vmnic0 will be used

vmnic used for NFS2 = [HEX(192.168.116.10) xor HEX(192.168.116.60)] mod (2)

= [0xC0A8740A xor 0xC0A8743C] mod (2)

= [0x36] mod (2)

= 0 -> vmnic0 will be used

As you can see, assigning these two IP addresses to the NFS storage was a bad choice. Communications toward both NFS1 and NFS2 will use vmnic0, leaving vmnic1 unused.
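The arithmetic above can be double-checked directly with shell arithmetic expansion, using the same hex values. Note that the XOR results are hexadecimal: 0x38 is 56 and 0x36 is 54 in decimal. Both are even, which is why both NFS addresses land on vmnic0. Here is a minimal POSIX sh sketch:

```shell
#!/bin/sh
# Re-check the "bad choice" example with the hex values used above.
VMK=0xC0A8740A      # 192.168.116.10
NFS1=0xC0A87432     # 192.168.116.50
NFS2=0xC0A8743C     # 192.168.116.60

# The first three octets are identical, so the XOR only keeps the last octet.
printf 'NFS1: xor=0x%X uplink=vmnic%d\n' $(( $VMK ^ $NFS1 )) $(( ($VMK ^ $NFS1) % 2 ))
printf 'NFS2: xor=0x%X uplink=vmnic%d\n' $(( $VMK ^ $NFS2 )) $(( ($VMK ^ $NFS2) % 2 ))
```

This prints `NFS1: xor=0x38 uplink=vmnic0` and `NFS2: xor=0x36 uplink=vmnic0`.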

2) Good choice of IP addresses

VMkernel = 192.168.116.10

NFS 1 = 192.168.116.50

NFS 2 = 192.168.116.51

Let's do some math again:

vmnic used for NFS1 = [HEX(192.168.116.10) xor HEX(192.168.116.50)] mod (2)

= [0xC0A8740A xor 0xC0A87432] mod (2)

= [0x38] mod (2)

= 0 -> vmnic0 will be used

vmnic used for NFS2 = [HEX(192.168.116.10) xor HEX(192.168.116.51)] mod (2)

= [0xC0A8740A xor 0xC0A87433] mod (2)

= [0x39] mod (2)

= 1 -> vmnic1 will be used

This was a wise choice. Communications with NFS1 will use vmnic0 and communications with NFS2 will use vmnic1, so traffic is balanced and both uplinks are utilized.
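When planning addresses for more storage interfaces, it can help to scan a few candidates before assigning them. The sketch below is a hypothetical POSIX sh helper (my own illustration, not a VMware tool) that applies the formula above to a range of last octets. It assumes that every candidate shares the VMkernel port's first three octets, so those octets cancel out in the XOR and only the last octets matter.

```shell
#!/bin/sh
# Hypothetical planning helper: for candidate storage addresses in the same
# /24 as the VMkernel port, print which vmnic the formula above would select.
# $1 = first three octets, $2 = VMkernel last octet,
# $3 = first candidate octet, $4 = last candidate octet, $5 = number of vmnics
plan_uplinks() {
  prefix=$1 vmk=$2 octet=$3 last=$4 nics=$5
  while [ "$octet" -le "$last" ]; do
    echo "$prefix.$octet -> vmnic$(( ($vmk ^ $octet) % $nics ))"
    octet=$(( $octet + 1 ))
  done
}

plan_uplinks 192.168.116 10 50 53 2
```

With two vmnics, only the lowest bit of the XOR matters. With the VMkernel on .10 (even), even storage octets map to vmnic0 and odd ones map to vmnic1. That is why .50 and .51 split nicely, while .50 and .60 (both even) collide.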

Some useful links:

Best Practices for running VMware vSphere on Network Attached Storage

Sample configuration of EtherChannel / Link Aggregation Control Protocol (LACP) with ESXi/ESX and Cisco/HP switches

That's all!!

Saturday, 21 September 2013

Monday, 9 September 2013

VMware: Uplink used by a VM in a LB NIC Teaming

In this post I will explain how to show the uplink used by a VM in a vSwitch with NIC Teaming Load Balancing.

Since we all put our uplinks in a Load Balancing NIC Teaming, it can sometimes be useful for troubleshooting to see which specific physical uplink a particular VM uses to access the network.

To explain this I will use a real case scenario that happened to me today.

A customer reported that after vMotioning a VM from one physical host to another, the VM was unable to reach a specific IP address in his network (a router, FYI).

I checked the basic networking settings from the vSphere Client, such as the vSwitch configuration and whether every vmnic reported network activity (i.e. a plugged cable). Everything was fine.

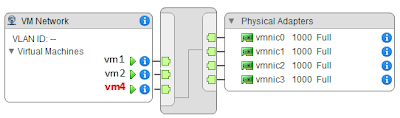

vSwitch configuration was similar to this:

vm4 was the VM reporting issues.

Analyzing the vSwitch0 settings, this was the result:

As you can see, all vmnics (vmnic0, vmnic1, vmnic2, vmnic3) are set as Active and Route Based on originating virtual port ID is selected as the Load Balancing method.

The next step was to take a look at the physical network. The customer has a blade enclosure with 3 hosts, each blade (i.e. ESXi physical host) having 4 NICs: vmnic0, vmnic1, vmnic2 and vmnic3.

Each NIC was connected to a different physical switch to achieve path reliability. Every switch is connected to the one 'core switch' (second switch from the top in the picture below) EXCEPT switch 4... and here all your alarm bells should ring.

To make a long story short, as most of you have already understood, this was the flaw in this configuration. Per se, having a switch not connected to other switches is not an issue. In this scenario, however, it's a fault because it's used in conjunction with Route Based on originating virtual port ID Load Balancing.

Route Based on originating virtual port ID is based on a Round Robin uplink assignment. This means that every VM that is powered on receives, in sequence, the next available uplink (vmnic) in the vSwitch.

Take a look at this picture for a better understanding:

VM power-on sequence in this case was:

| Power-on sequence | Uplink assigned |
|---|---|
| vm1 | vmnic0 |
| vm2 | vmnic1 |
| vm3 | vmnic2 |
| vm4 | vmnic3 |
| vm5 | vmnic0 |

...and so on. For example, if a new vm6 is powered on, it will use vmnic1. If vm3 and vm6 are then powered off and vm6 is powered back on, it will use vmnic2 as its uplink. Pretty simple, right?
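The power-on sequence above can be reproduced with a toy model in POSIX sh. It models the round-robin behaviour exactly as described in this post: a pointer that advances on every power-on and is not reset when a VM is powered off. It is an illustration only, not VMware's internal implementation. The function `power_on` and the variable names are hypothetical.

```shell
#!/bin/sh
# Toy model of the round-robin assignment described above: every VM that is
# powered on takes the next uplink in sequence; powering a VM off does not
# move the pointer. Illustration only, not VMware's implementation.
UPLINKS="vmnic0 vmnic1 vmnic2 vmnic3"
NUM_UPLINKS=4
next=0   # zero-based index of the uplink the next powered-on VM receives

power_on() {
  # cut fields are 1-based, hence next + 1
  uplink=$(printf '%s\n' "$UPLINKS" | cut -d' ' -f$(( $next + 1 )))
  echo "$1 $uplink"
  next=$(( ($next + 1) % $NUM_UPLINKS ))
}

for vm in vm1 vm2 vm3 vm4 vm5 vm6; do
  power_on "$vm"
done

# vm3 and vm6 are powered off, then vm6 is powered back on
power_on vm6
```

This prints vm1 to vm5 on vmnic0 to vmnic3 and back to vmnic0, then vm6 on vmnic1 and, after the power cycle, vm6 on vmnic2, matching the table and the example above.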

In my case, vm4 had network issues reaching the router IP address (the rightmost grey block in the picture above). After the vMotion, vm4 ended up on a host's vSwitch that assigned it to vmnic3, which is connected to an isolated physical switch. This is easily solved either by adding the missing inter-switch link or by removing vmnic3 from the Load Balancing active adapters.

After this theoretical prologue, let's dive a bit into practice and see how to display which uplink (in a LB NIC Team) is used by a VM.

Let's log in to our ESXi host.

To retrieve port information for a particular VM, we need to use the following command:

~ # esxcli network vm port list -w <World_ID>

Where <World_ID> is the World ID of our VM. The World ID uniquely identifies a VM on a host. It changes every time the VM is vMotioned or its power state changes (i.e. the VM is powered off and back on again).

To display the World IDs of our VMs, we need to execute the following command. It retrieves information about every VM running on the host; to keep things clean, I only show the output for vm4.

~ # esxcli vm process list

vm4

World ID: 3307679

Process ID: 0

VMX Cartel ID: 3307678

UUID: 42 19 4a 84 a3 52 c7 ca-d9 28 93 bf 20 6c 2d bc

Display Name: vm4

Config File: /vmfs/volumes/51dc35ee-c4b96dc0-1c1c-b4b53f5110c0/vm4/vm4.vmx

World ID: 3307679 is the value of interest.

If we run the command above again, this time specifying the World ID, we get:
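On a host with many VMs, scrolling through this output gets tedious. Here is a small POSIX sh/awk sketch that reads `esxcli vm process list` output on stdin and prints the World ID for a given Display Name. `world_id_for` is a hypothetical helper of mine, not an esxcli feature. It assumes each VM block lists `World ID:` before `Display Name:`, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read `esxcli vm process list` output on stdin and
# print the World ID of the VM whose Display Name equals $1.
world_id_for() {
  awk -v name="$1" '
    /World ID:/ { wid = $NF }                 # remember the latest World ID
    /Display Name:/ {
      sub(/^[ \t]*Display Name: /, "")        # keep only the name itself
      if ($0 == name) { print wid; exit }
    }
  '
}
```

After defining the function in the ESXi shell, the following should print 3307679 for the vm4 shown above:

~ # esxcli vm process list | world_id_for vm4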

~ # esxcli network vm port list -w 3307679

Port ID: 33554444

vSwitch: vSwitch0

Portgroup: VM Network

DVPort ID:

MAC Address: 00:50:56:99:45:3b

IP Address: 0.0.0.0

Team Uplink: vmnic3

Uplink Port ID: 33554438

Active Filters:

As you can see, Team Uplink: vmnic3 indicates that vmnic3 is used by this VM.
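To pull out just that field, the port list output can be filtered with awk. This is a minimal POSIX sh sketch with a hypothetical helper name, `team_uplink`. It prints one line per port, so a VM with several vNICs yields several uplinks. It assumes the `Team Uplink: <vmnic>` line format shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `esxcli network vm port list` output on stdin and
# print the Team Uplink of every port (one line per vNIC of the VM).
team_uplink() {
  awk -F': ' '/Team Uplink:/ { print $2 }'
}
```

After defining the function, the following should print vmnic3 for the VM above:

~ # esxcli network vm port list -w 3307679 | team_uplink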

That's all!
